Abstract

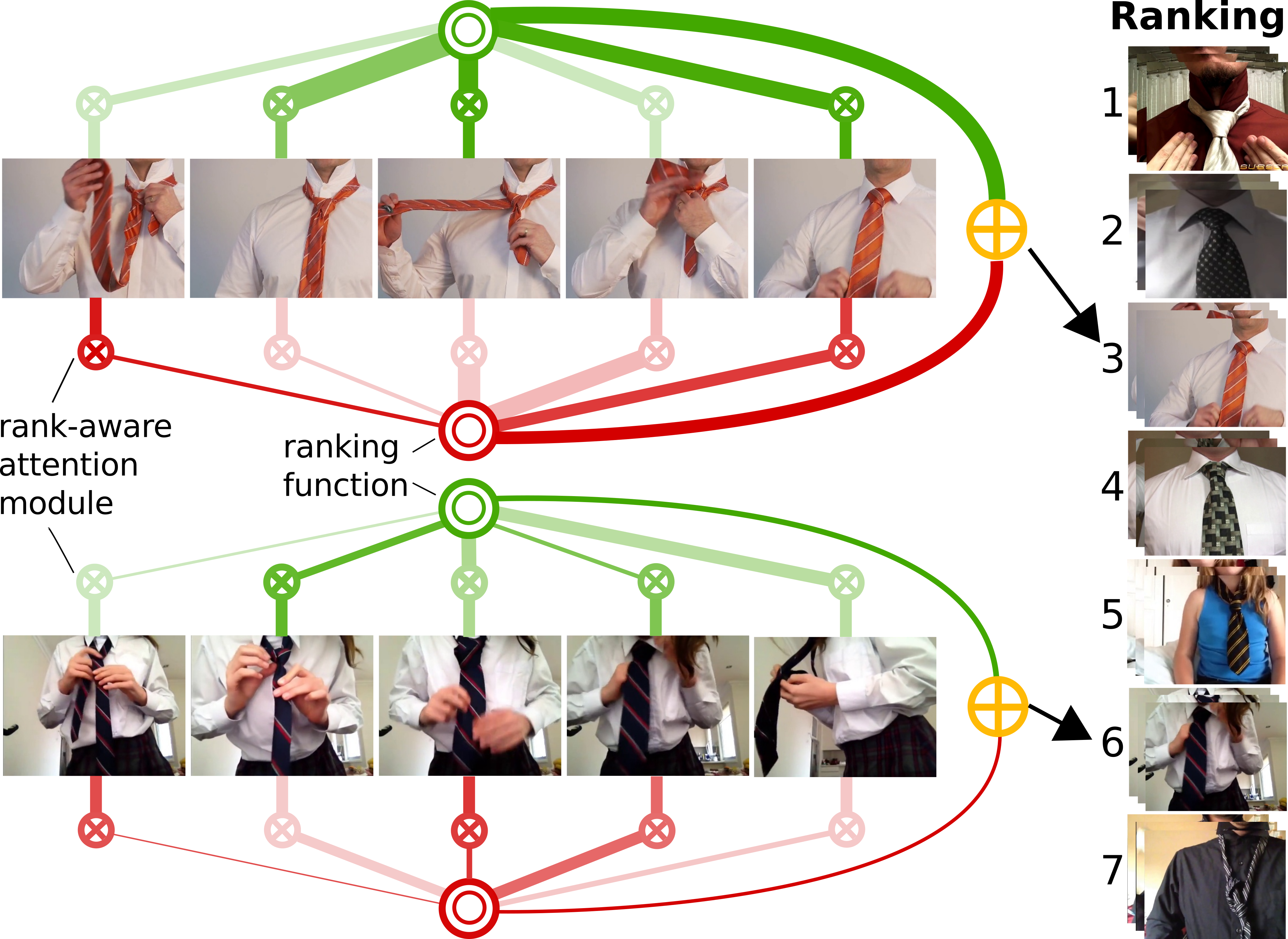

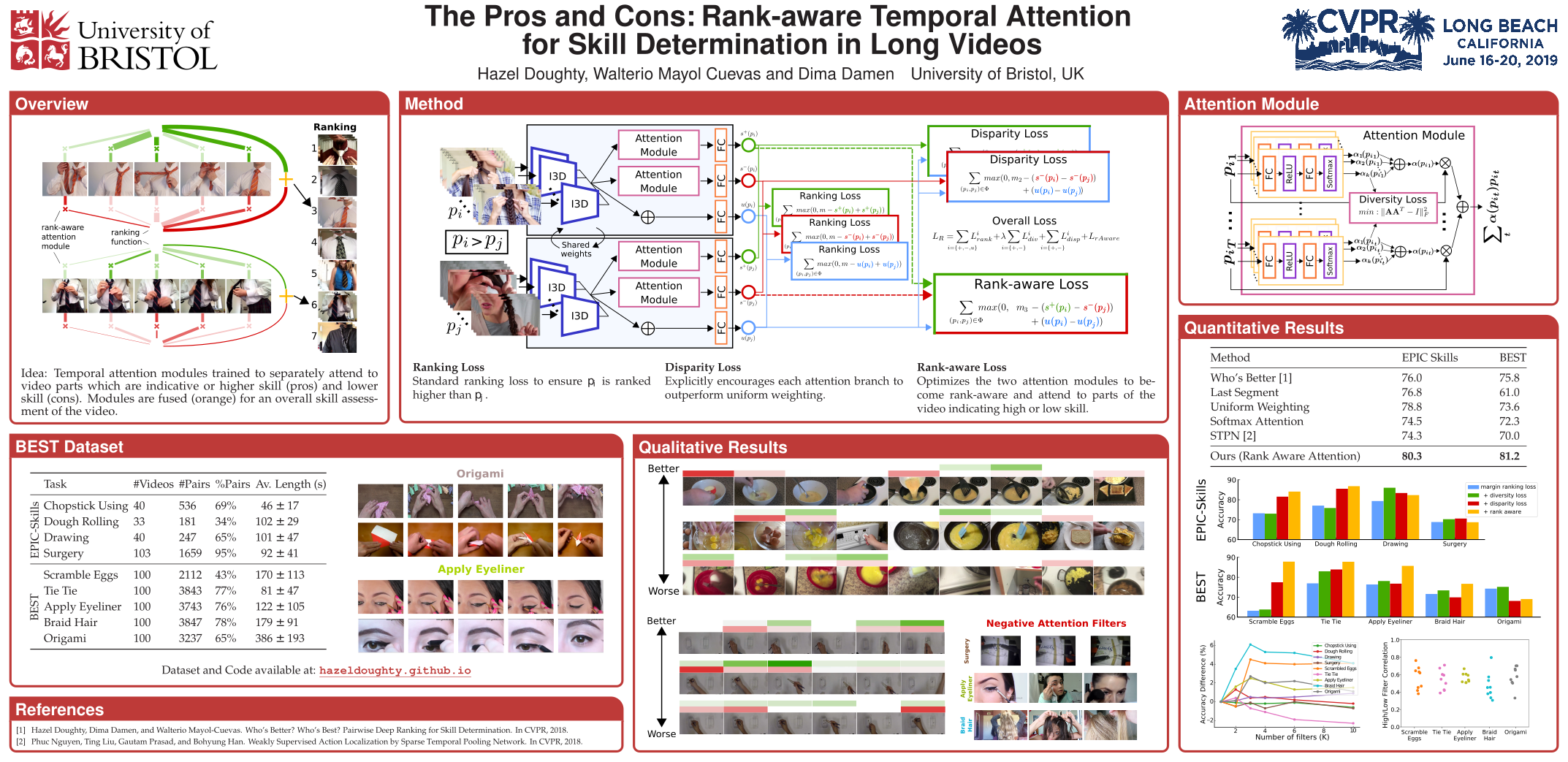

We present a new model to determine relative skill from long videos through learnable temporal attention modules. Skill determination is formulated as a ranking problem, making it suitable for common and generic tasks. However, in long videos, some parts are irrelevant for assessing skill, and the skill exhibited may vary throughout a video. We therefore propose a method which assesses the relative overall level of skill in a long video by attending to its skill-relevant parts.
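As a rough illustration of the two ideas above (ranking a pair of videos, and weighting video segments by attention), the following minimal sketch pools per-segment scores with plain softmax attention and applies a standard pairwise margin ranking loss. It is not the model or the loss proposed in the paper: the function names, the margin value, and all numbers are hypothetical, and in a real system the segment scores and attention logits would be produced by learned networks.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attended_score(segment_scores, attention_logits):
    """Video-level score as the attention-weighted sum of segment scores."""
    weights = softmax(attention_logits)
    return sum(w * s for w, s in zip(weights, segment_scores))


def margin_ranking_loss(score_better, score_worse, margin=1.0):
    """Zero once the higher-skill video outscores the other by `margin`."""
    return max(0.0, margin - (score_better - score_worse))


# Hypothetical pair: both videos have three segments, and attention
# concentrates on the middle (assumed skill-relevant) segment.
better = attended_score([0.2, 2.0, 0.1], [0.0, 3.0, 0.0])
worse = attended_score([0.3, 0.4, 0.2], [0.0, 3.0, 0.0])
loss = margin_ranking_loss(better, worse)
```

With uniform attention logits the pooled score reduces to the mean of the segment scores, which is the case where every part of the video is treated as equally relevant.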

Our approach trains temporal attention modules, learned with only video-level supervision, using a novel rank-aware loss function. In addition to attending to task-relevant video parts, our proposed loss jointly trains two attention modules to separately attend to video parts which are indicative of higher (pros) and lower (cons) skill. We evaluate our approach on the EPIC-Skills dataset and additionally annotate a larger dataset from YouTube videos for skill determination with five previously unexplored tasks. Our method outperforms previous approaches and classic softmax attention on both datasets by over 4% pairwise accuracy, and as much as 12% on individual tasks. We also demonstrate our model’s ability to attend to rank-aware parts of the video.
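The pairwise accuracy reported above can be read as the fraction of annotated video pairs that a model orders correctly. The sketch below shows one way to compute it, assuming each pair is given as (higher-skill video, lower-skill video); the function name, the video identifiers, and the scores are hypothetical and are not taken from the paper or its datasets.

```python
def pairwise_accuracy(scores, ranked_pairs):
    """Fraction of (better, worse) pairs where the better video scores higher.

    scores: dict mapping a video id to its predicted skill score.
    ranked_pairs: list of (better_id, worse_id) tuples from annotation.
    """
    if not ranked_pairs:
        raise ValueError("ranked_pairs must not be empty")
    correct = sum(1 for better, worse in ranked_pairs
                  if scores[better] > scores[worse])
    return correct / len(ranked_pairs)


# Hypothetical predictions and annotations for three videos.
example_scores = {"a": 0.9, "b": 0.5, "c": 0.1}
example_pairs = [("a", "b"), ("b", "c"), ("c", "a")]
example_accuracy = pairwise_accuracy(example_scores, example_pairs)
```

Ties are counted as incorrect here, which is a design choice of this sketch rather than something the abstract specifies.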

Poster

Downloads

- Paper [PDF] [ArXiv]

- Supplementary [Video]

- Code and data [Coming soon]

Bibtex

@inproceedings{Doughty_2019_CVPR,
  author = {Doughty, Hazel and Mayol-Cuevas, Walterio and Damen, Dima},
  title = {{T}he {P}ros and {C}ons: {R}ank-aware {T}emporal {A}ttention for {S}kill {D}etermination in {L}ong {V}ideos},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2019}
}

Acknowledgements

This research is supported by an EPSRC DTP and EPSRC GLANCE (EP/N013964/1).